Deepesh Rastogi

2024-05-03 04:04:57


Navigating the Lifecycle of a Generative AI Project: From Conception to Application


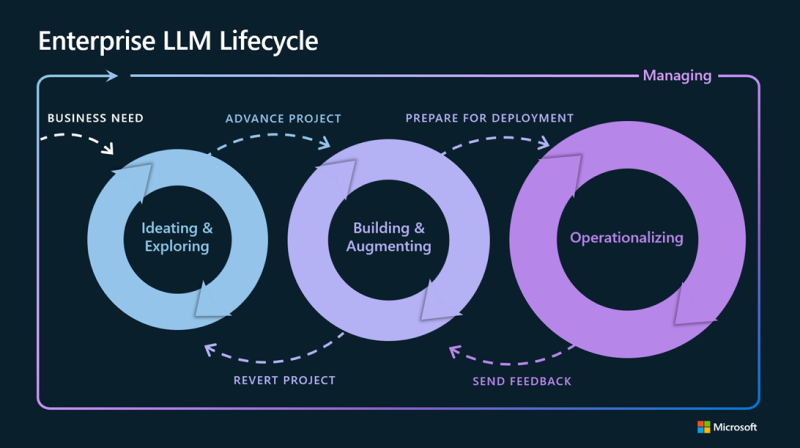

The advent of generative AI has opened a new era of innovation, driving efficiency and creativity across many fields. However, the journey from an initial idea to a fully functional AI application is intricate and calls for a structured approach. This article walks through the lifecycle of a generative AI project in four critical phases: Defining Scope, Model Selection, Adaptation & Alignment, and Application Integration.

Tech Stack

- Python: Serves as the primary programming language due to its rich ecosystem for AI and data science. It's used for scripting the initial setup, including API calls and data handling.

- Jupyter Notebook: Offers an interactive environment for prototyping and testing initial ideas, allowing for a seamless transition from concept to code.

- Hugging Face Transformers: Provides access to a vast repository of pre-trained models and tools. At this stage, choosing between an existing LLM and training a new one is a critical decision. For projects that need customization, the Transformers library is instrumental in selecting and downloading the right foundation model.

- FLAN-T5-Base: As a concrete choice, FLAN-T5, an instruction-tuned variant of Google's T5 that handles many tasks without extensive fine-tuning, is a good starting point for a range of generative tasks.

- PyTorch: As the primary backend for Hugging Face Transformers, PyTorch handles model fine-tuning and adaptation. Its dynamic computational graph makes it easy to experiment with and adjust models.

- Prompt Engineering & Supervised Fine-Tuning: Using Python and PyTorch for prompt engineering and dataset preparation, developers can refine the model's understanding of the task and the quality of its output.

- Reinforcement Learning from Human Feedback (RLHF): Python scripts built on PyTorch can implement reinforcement learning techniques that align the model's outputs with human preferences and ethical guidelines.

- AWS SageMaker: In the deployment and integration phase, AWS SageMaker becomes crucial. It offers a managed platform for deploying machine learning models at scale behind hosted endpoints, so the model is readily accessible to applications. SageMaker's monitoring, A/B testing, and auto-scaling capabilities support operating the model within real-world applications.
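
As a minimal sketch of the dataset-preparation step for supervised fine-tuning, the snippet below turns raw records into prompt/target pairs and holds out a validation slice. The record format (`input`/`output` keys), the function names, and the split fraction are illustrative assumptions, not part of any library.

```python
import random

def to_sft_pairs(records, instruction):
    """Turn raw {"input": ..., "output": ...} records into (prompt, target) pairs."""
    pairs = []
    for rec in records:
        text = rec["input"].strip()
        target = rec["output"].strip()
        if not text or not target:
            continue  # skip empty examples rather than train on them
        # Prepend the instruction so training prompts match the prompts used at inference.
        pairs.append((f"{instruction}\n\n{text}", target))
    return pairs

def train_val_split(pairs, val_fraction=0.1, seed=42):
    """Shuffle with a fixed seed (reproducible) and hold out a validation slice."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]
```

The resulting prompts and targets could then be tokenized for FLAN-T5, for example with `tokenizer(prompts, text_target=targets, truncation=True)` in recent versions of Transformers.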

Phase 1: Defining Scope

The foundation of a successful generative AI project lies in clearly defining its scope. This means identifying the specific problem the project aims to solve, whether it is essay writing, summarization, translation, or information retrieval. Once the scope is established, the next step is to determine the appropriate API to invoke for that task, which sets the stage for the project's development path.
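
One way to make the scope concrete is to pin each in-scope task to the instruction the application will send to the model. The sketch below assumes an instruction-tuned model such as FLAN-T5, which accepts all of these tasks as plain text-to-text prompts; the scope names and template wording are hypothetical and would need tuning for a real project.

```python
# Hypothetical mapping from project scope to an instruction template.
TASK_TEMPLATES = {
    "summarization": "Summarize the following text:\n\n{text}",
    "translation": "Translate the following English text to German:\n\n{text}",
    "essay_writing": "Write a short essay on the following topic:\n\n{text}",
    "information_retrieval": "Answer the question using only the context below.\n\n{text}",
}

def build_prompt(scope, text):
    """Fill the template for an in-scope task; reject anything outside the defined scope."""
    try:
        template = TASK_TEMPLATES[scope]
    except KeyError:
        raise ValueError(
            f"Unsupported scope {scope!r}; expected one of {sorted(TASK_TEMPLATES)}"
        ) from None
    return template.format(text=text)
```

Rejecting unknown scopes explicitly keeps the application from silently sending tasks the project was never designed or evaluated for.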

Phase 2: Selection

After outlining the project's objectives, the focus shifts to selecting the core technology. Developers must decide between using an existing pre-trained Large Language Model (LLM) or training their own model from scratch, which demands far more data, compute, and expertise. The decision hinges on project requirements, available resources, and the level of customization needed, and it sets the project's technical foundation.
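
With Hugging Face Transformers, the two options differ by a single call: `from_pretrained` downloads trained weights, while `from_config` builds the same architecture with random weights that must be trained from scratch. The sketch below assumes `transformers` and `torch` are installed and uses the public `google/flan-t5-base` checkpoint. The memory helper encodes a common rule of thumb (about 16 bytes per parameter for full fp32 fine-tuning with Adam, excluding activations), not an exact figure.

```python
MODEL_ID = "google/flan-t5-base"  # public FLAN-T5-base checkpoint on the Hugging Face Hub

def load_model(from_scratch=False):
    """Return (tokenizer, model): pre-trained weights, or the same architecture randomly initialized."""
    # Imported lazily so the helper below runs without transformers installed.
    from transformers import AutoConfig, AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    if from_scratch:
        # Architecture only: every weight must be learned from your own data.
        config = AutoConfig.from_pretrained(MODEL_ID)
        model = AutoModelForSeq2SeqLM.from_config(config)
    else:
        # Trained weights: usable immediately for prompting, or as a base for fine-tuning.
        model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_ID)
    return tokenizer, model

def full_finetune_memory_gb(num_params, bytes_per_param=16):
    """Rough floor for full fp32 fine-tuning with Adam.

    16 bytes/parameter = 4 (weights) + 4 (gradients) + 8 (two Adam moment buffers).
    Activations, batch size, and framework overhead are NOT included.
    """
    return num_params * bytes_per_param / 1e9
```

For example, `full_finetune_memory_gb(250_000_000)` gives 4.0 GB, a rough floor for fully fine-tuning a model of FLAN-T5-base's size (on the order of 250M parameters). After `tokenizer, model = load_model()`, `sum(p.numel() for p in model.parameters())` reports the exact parameter count.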

Phase 3: Adaptation & Alignment

With the core model selected, the Adaptation & Alignment phase tailors the model to the project's specific needs. Prompt engineering comes into play here, using in-context learning: instructions and worked examples placed in the prompt guide the model's responses without changing its weights. Further refinement comes from supervised fine-tuning on curated datasets. Alignment then integrates human feedback through reinforcement learning, so that the model's outputs match desired outcomes and ethical standards. Evaluating what the model has learned is crucial at this stage to confirm it is ready for practical use.
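
Several ideas in this phase can be illustrated in plain Python. The sketch below shows a few-shot prompt for in-context learning, the pairwise preference loss commonly used to train the reward model in RLHF, `-log(sigmoid(r_chosen - r_rejected))`, and a simplified ROUGE-1 F1 score for comparing generated text against a reference. The function names, prompt layout, and whitespace tokenization are simplifying assumptions; real projects would use an established metric implementation (for example the ROUGE metric in Hugging Face's `evaluate` library) and a full RLHF training stack.

```python
import math
from collections import Counter

def few_shot_prompt(instruction, examples, query):
    """In-context learning: worked (input, output) pairs precede the new input; no weights change."""
    parts = [instruction]
    for inp, out in examples:
        parts.append(f"Input: {inp}\nOutput: {out}")
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise reward-model loss: -log(sigmoid(r_chosen - r_rejected)).

    Low when the human-preferred response gets the higher reward, high otherwise.
    Computed as a numerically stable softplus(-margin).
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def rouge1_f1(candidate, reference):
    """Simplified ROUGE-1 F1: unigram overlap on lowercase whitespace tokens."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For instance, `rouge1_f1("the cat", "the cat sat on the mat")` has perfect precision but recall of 1/3, giving an F1 of 0.5; a short output can look precise while missing most of the reference.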

Phase 4: Application Integration

The final phase, Application Integration, is where the project comes to life. The model is optimized and deployed for efficient operation, then integrated into LLM-powered applications. Developers must handle scalability, performance, and user experience so that the model's capabilities are embedded seamlessly within practical applications, adding to their functionality and value.
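
On AWS, one common route is the SageMaker Python SDK's Hugging Face integration, which can host a Hub model such as FLAN-T5 behind a managed endpoint. The sketch below assumes the `sagemaker` and `boto3` packages, an IAM role ARN with SageMaker permissions, and a container version combination (`transformers_version="4.26"`, `pytorch_version="1.13"`, `py_version="py39"`) that should be checked against the currently supported Hugging Face containers. The endpoint name and instance type are placeholders. The payload helpers follow the `inputs`/`parameters` JSON format of the Hugging Face inference container.

```python
import json

ENDPOINT_NAME = "flan-t5-base-demo"  # placeholder endpoint name

def deploy_flan_t5(role_arn, instance_type="ml.m5.xlarge"):
    """Deploy google/flan-t5-base from the Hugging Face Hub to a SageMaker real-time endpoint."""
    # Imported lazily: needs the `sagemaker` SDK and AWS credentials.
    from sagemaker.huggingface import HuggingFaceModel

    model = HuggingFaceModel(
        env={"HF_MODEL_ID": "google/flan-t5-base", "HF_TASK": "text2text-generation"},
        role=role_arn,
        transformers_version="4.26",  # assumed supported container versions
        pytorch_version="1.13",
        py_version="py39",
    )
    return model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        endpoint_name=ENDPOINT_NAME,
    )

def build_payload(prompt, max_new_tokens=64):
    """JSON request body in the Hugging Face inference container's format."""
    return json.dumps({"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}})

def parse_response(body):
    """text2text-generation responses look like [{"generated_text": "..."}]."""
    return json.loads(body)[0]["generated_text"]

def generate(prompt, endpoint_name=ENDPOINT_NAME):
    """Call the deployed endpoint from application code."""
    import boto3  # AWS SDK, imported lazily

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    return parse_response(response["Body"].read())
```

Application code then only needs a call such as `generate("Summarize the following text: ...")`. When the endpoint is no longer needed, calling `delete_endpoint()` on the predictor returned by `deploy_flan_t5` stops the instance charges.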

Conclusion

The lifecycle of a generative AI project is a journey of meticulous planning, development, and refinement. Each phase, from defining the project's scope to integrating the AI into real-world applications, requires careful consideration and expertise. By adhering to this structured approach, developers can navigate the complexities of generative AI projects, unlocking innovative solutions that harness the power of artificial intelligence to address diverse challenges and opportunities.

#GenerativeAI, #AIProjectLifecycle, #MachineLearning, #AIdevelopment, #Python, #PyTorch, #HuggingFace, #TransformerModels, #FLANT5, #JupyterNotebook, #AWSSageMaker, #AIDeployment, #ModelTraining, #PromptEngineering, #ReinforcementLearning
